I skipped half of this book, but you should definitely buy a copy.

Billion Dollar Lessons.

A book review of Billion Dollar Lessons

It isn’t enough to know that an idea is probably flawed. There has to be a method, agreed on ahead of time, for discussing possible problems and making sure they are given due weight. Otherwise, once a strategy starts to build momentum it will steamroll any possible objections.

If you’re short of time, carefully read that paragraph again, take it to heart, and do something with it.

The generally poor quality of decision making in business fascinates me, mainly as someone on the receiving end of such decisions, as do the efforts of various individuals, groups, movements, and products to change that. For example, I’ve been interested in “red team” thinking, or adversarial analysis, for some time: the process of actively trying to find problems with your decisions as you make them, and certainly before you implement them. This informs my interest in decision making and in wargaming, so I expected to really enjoy this book.

And I kind of did enjoy it, but not initially. The first section, “Failure Patterns”, just didn’t do it for me, and I ended up skipping its 175 or so pages. This section details numerous business failures, divided into seven different categories of business action: the lessons that cost a billion dollars, as per the title. It is well written, but I decided to skip to the second half, “Avoiding the Same Mistakes”, rather than skip the book altogether. I’m not quite sure why. It might be that I’m much more interested in the process than in specific events, in the same way that I’m interested in how you solve puzzles and play games, but not in solving puzzles or playing games. Also, reading about so many failures is disheartening, for a variety of reasons.

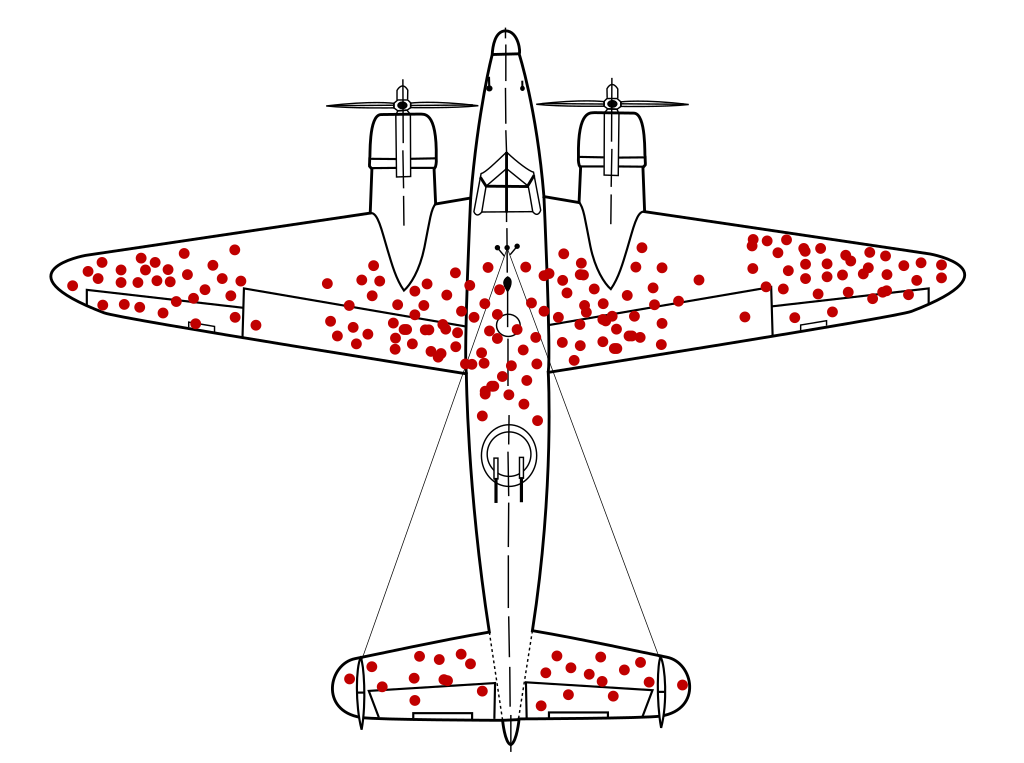

Of course, it’s good to see so many useful failures illuminated, to counter the Survivorship Bias that pervades business discourse in general; but this is still quite a trudge.

All pieces mentioning Survivorship Bias must include the "measles plane".

(If you’re not familiar with the idea of “Survivorship Bias” and the related story of the World War Two bomber armour, do check out the Wikipedia page, which explains the significance of the “plane with a rash”.)

I think the purpose of the first half of Billion Dollar Lessons is to convince the reader, through historical examples, that there’s a genuine problem here. Anyone who has had a career of more than a decade, or has followed business news, doesn’t need to be convinced that there is a problem. At the very least they want to learn how to avoid being impacted by such decisions; at best they want to avoid making those poor decisions themselves.

Applying those lessons

Throughout the rest of the book there are many valuable nuggets of information, well referenced and easy to cite, that illustrate how a common “business truth” has been disproved through analysis and experimentation. I’ve listed the ones I found most interesting below, to show you how useful Billion Dollar Lessons could be:

In 1955, Solomon Asch published results from a series of experiments that demonstrated wonderfully the pressures to conform.

Asch’s experiments put a subject in with a group of seven to nine people he hadn’t met. Unknown to the subject, the others were all cooperating with the experimenters. An experimenter announced that the group would be part of a psychological test of visual judgment. The experimenter then held up a card with a single line on it, followed by a card with three lines of different lengths. Group members were asked, in turn, which line on the second card matched the line on the first. The unsuspecting subject was asked toward the end of the group. The differences in length were obvious, and everyone answered the question correctly for the first three sets of cards. After that, however, everyone in the group who was in on the experiment had been instructed to give a unanimous, incorrect answer. They continued to agree on a wrong answer from that point on, except for the occasional time when they’d been instructed to give the correct answer, to keep the subject from getting suspicious. As Asch put it, subjects were being tested to see what mattered more to them, their eyes or their peers.

The eyes had it, but not by much. Asch said that, in 128 runnings of the experiment, subjects gave the wrong answer 37 percent of the time. Many subjects looked befuddled. Some openly expressed their feeling that the rest of the group was wrong. But they went along.

Interestingly, Asch found that all it took was one voice of dissent, and the subject gave the correct answer far more frequently. If just one other person in the room gave the correct answer, the subject went along with the majority just 14 percent of the time - still high, but not nearly so bad.

That’s a great point on how groupthink can happen, and on how useful it can sometimes be simply to dissent: to look for problems so that everyone rethinks their assumptions. It brings to mind the Israel Defense Forces’ idea of the Tenth Man, where it is someone’s specific responsibility to bring up issues with an idea or plan.

I’m a little wary of sharing this next point, because it shows how inexpert experts can be. I should stress that I’m a fan of experts: someone without expertise in a situation may not even be able to frame the possible choices well enough to get them wrong within the same “ballpark” as an expert would. Still, this kind of analysis shows the value in questioning expertise:

In The Wisdom of Crowds, James Surowiecki writes: “The between-expert agreement in a host of fields, including stock picking, livestock judging, and clinical psychology, is below 50 percent, meaning that experts are as likely to disagree as to agree. More disconcertingly, one study found that the internal consistency of medical pathologists’ judgments was just 0.5, meaning that a pathologist presented with the same evidence would, half the time, offer a different opinion. Experts are also surprisingly bad at what social scientists call ‘calibrating’ their judgments. If your judgments are well-calibrated, then you have a sense of how likely it is that your judgment is correct. But experts are much like normal people: They routinely overestimate the likelihood that they’re right.”

Studies show that experts are actually more likely to suffer from overconfidence than the rest of the world. After all, they’re experts.

My opinions on “epistemic trespassing”¹ are too involved to go into here. Where expertise is and isn’t useful is a complex question that depends on the context and the expertise involved, but commentators usually apply generic hypotheticals.

A representation of bewildering options, picture by geralt on Pixabay.

I’ve recently been discussing Matt Mower’s AgendaScope service with him, in particular how it can help organisations actually make decisions rather than waiting for external factors to make the decision for them. I was reminded of that service, and the need for it, when I read this quotation in the book from Tom Watson Jr. of IBM, on the benefit of facing problems:

“I never varied from the managerial rule that the worst possible thing we could do would be to lie dead in the water with any problem. Solve it, solve it quickly, solve it right or wrong. If you solved it wrong, it would come back and slap you in the face, and then you could solve it right. Lying dead in the water and doing nothing is a comfortable alternative because it is without immediate risk, but it is an absolutely fatal way to manage a business.”

While I’m all for Devil’s Advocacy² as a concept, I can appreciate why it isn’t more commonly used by organisations. I also haven’t really seen anyone make a clear career success of it. This next quote is a great description of the problem with introducing devil’s advocates, people whose job it is to find issues with others’ proposed ideas:

In “The Essence of Strategic Decision Making”, Charles Schwenk reports that numerous field and laboratory research studies support the effectiveness of devil’s advocacy in improving organizational decision making. It improves analysis of data, understanding of a problem, and quality of solutions. In particular, devil’s advocacy seems most effective when tackling complex and ill-structured problems. It increases the quality of assumptions, increases the number of strategic alternatives examined, and improves decision makers’ use of ambiguous information to make predictions.

The question isn’t whether devil’s advocacy improves decisions. The question is how to introduce devil’s advocacy in a way that organizations can tolerate.

I think one way around this resistance to devil’s advocacy is wargaming and other simulations. In a wargame, participants learn the likely outcomes of their choices through their own actions and decisions. This active learning can have a much greater impact than having an expected result explained or proven. This next quote shows the value of simulation, which ties in especially well with my practice of running discussions and exercises for organisations:

More generally, we think that simulation is a valuable technique for prompting deeper discussions of assumptions and risks. Using simulation methods forces the odds of key assumptions to be quantified. And they force the organization to actually do the math about the overall probability of success. From high school math class, we know that if a plan depends on three things happening, the odds of the plan working are just the multiplication of the odds of each thing. So, if each has a 70 percent chance of happening, the odds of the whole plan working are just 0.7 x 0.7 x 0.7, or 0.34. In other words, just about one in three. While 70 percent sounds reasonable, one in three starts feeling dicey. But planners rarely make that kind of calculation.

Our experience is that the value of simulation doesn’t come so much from the final numbers. Executives rarely accept such calculations, especially if they run counter to their intuitions or desired actions. Instead, the value comes from engaging decision makers in the model itself. Forcing the conversation about the key assumptions and how they interact is where the real value lies.
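The arithmetic in that passage is easy to check for yourself. The sketch below is a minimal illustration, not a method from the book: it assumes, as the book’s multiplication implicitly does, that the three key assumptions are independent, and the function names are hypothetical. It computes the exact product of the odds and also estimates it with a simple Monte Carlo simulation, running the “plan” many times and counting how often every assumption comes true.

```python
import math
import random


def plan_success_probability(probabilities):
    """Exact odds that every independent assumption holds: the product of the individual odds."""
    return math.prod(probabilities)


def simulate_plan(probabilities, trials=100_000, seed=42):
    """Monte Carlo estimate: replay the plan many times and count the runs where everything goes right."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    successes = 0
    for _ in range(trials):
        # In this run the plan works only if each assumption independently comes true.
        if all(rng.random() < p for p in probabilities):
            successes += 1
    return successes / trials


if __name__ == "__main__":
    assumptions = [0.7, 0.7, 0.7]  # the book's example: three things, each 70 percent likely
    print(f"exact: {plan_success_probability(assumptions):.3f}")  # exact: 0.343
    print(f"simulated: {simulate_plan(assumptions):.3f}")
```

The simulated figure should land close to the exact 0.343. What the exercise makes vivid is how quickly the odds fall as assumptions are added: with five such assumptions the product is 0.7 to the power of five, about 0.17.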

The value of wargaming, exercises and simulated decision making ties in with the value of failure. Playing game-based versions of a situation allows you to accumulate “synthetic experience”, which means you can gain a greater feel for how a decision will work out and explore the range of possible outcomes. If you need a quote for a twenty-five-second pitch on that, there’s a great one at the start of the book’s Epilogue:

Robert A. Lovett, President Harry S. Truman’s defense secretary during much of the Korean War, observed, “Good judgment is usually the result of experience. And experience is frequently the result of bad judgment.”

A picture of Lovett, hosted on Wikipedia.

Overall, modern business strategy seems to come down to “planning”. While there are as many definitions of strategy as there are strategists to make them, in every field where strategy applies, none of those definitions is solely “making a plan”. Yet mere planning is often what business strategy amounts to: in a complex environment filled with allies and adversaries, a business will plan out a set of actions as though nothing will change in the next six to thirty-six months. It has taken me some time to realise that I’m not missing something; business strategy is commonly over-simplistic. There’s a useful analogy for this in the book too:

Failed strategies sometimes fly like cannonballs. … Rather than cannonballs, strategies should be more like cruise missiles. Once strategies are launched, there should be mechanisms to gather feedback and continuously adapt the flight path to the terrain and strike the intended target.

One useful approach is to construct alarm systems around key conditions that must continue to hold for a plan to remain viable. An alarm system is a simple warning device that detects when a condition has changed and warrants attention. Applied to strategies, it might be nothing more than a list of critical success factors that are continuously monitored, to make sure that changes in the plan, key forecasts, or market conditions do not overtake previous calculations.
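An alarm system of the kind the book describes can be very simple. The following is a minimal sketch, not taken from the book: the factor names, thresholds, `SuccessFactor` class and `check_alarms` function are all hypothetical, and it assumes each critical success factor can be reduced to a number with a range in which the plan stays viable.

```python
from dataclasses import dataclass


@dataclass
class SuccessFactor:
    """A hypothetical condition the plan depends on, with the range in which the plan stays viable."""
    name: str
    minimum: float
    maximum: float


def check_alarms(factors, observations):
    """Return the names of factors whose latest observation is missing or outside its viable range."""
    alarms = []
    for factor in factors:
        value = observations.get(factor.name)
        # A missing measurement also raises an alarm: an unmonitored condition cannot be trusted.
        if value is None or not (factor.minimum <= value <= factor.maximum):
            alarms.append(factor.name)
    return alarms


if __name__ == "__main__":
    # Illustrative factors and thresholds only; a real plan would define its own.
    factors = [
        SuccessFactor("market_growth_pct", 3.0, 100.0),
        SuccessFactor("unit_cost_usd", 0.0, 12.5),
        SuccessFactor("customer_churn_pct", 0.0, 5.0),
    ]
    latest = {"market_growth_pct": 1.8, "unit_cost_usd": 11.0}
    print(check_alarms(factors, latest))  # ['market_growth_pct', 'customer_churn_pct']
```

Treating a missing observation as an alarm is a deliberate design choice here: the book’s point is that key conditions must be continuously monitored, so a factor nobody is measuring is itself a warning sign.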

And so, after all of this, will this book help you set up, use, or explore some kind of Devil’s Advocacy function in your own organisation? Chapters Ten and Eleven lay out how you could achieve this, along with the pitfalls and the benefits. I particularly liked these chapters for calling for the right questions to be asked, rather than requiring every critique to come with a solution. I think coming up with the right questions is a particular skill, and an often undervalued one, so I was pleased to see it pushed to the fore:

Perhaps the hardest aspect of the devil’s advocate review for many to accept is that the goal is to deliver questions, not answers. Many corporate cultures espouse the attitude that one should not point to problems without also having answers. In general, planning processes try to produce the best answers. The devil’s advocate review will focus on generating the most illuminating questions. This helps to ensure that members of the review team do not push their own agenda or preconceived answers but, instead, focus on examining the strategy as presented. In this vein, the review team might well leave the patient chopped up on the table, if that is warranted. It shouldn’t try to sew it back up or even make it presentable. While this might sound harsh, it is important to head off any attempt to replicate the strategy process to provide “better” answers. That’s just not possible in the short window of the review. At the same time, good questions should lead to better answers.

Considering the book was originally published in 2008, I’m not sure how much difference it has made by 2023. But if you want to make better decisions, or want your organisation to make better decisions, I can’t recommend Billion Dollar Lessons highly enough.

-

Epistemic Trespassing is the idea that experts should “stay in their lane”, and that no-one external to an area of expertise should comment on that area. Its applicability is very context dependent. ↩︎

-

Devil’s Advocacy is the practice of deliberately looking for reasons against a decision or course of action. The term comes from the Roman Catholic Church’s practice of arguing against the canonisation of a candidate proposed for sainthood. ↩︎